MOCHA is an automated training tool for data-driven objective assessment of robotic surgery skill.

Assessment of technical skill in robotic minimally invasive surgery (RMIS) training involves tedious evaluation by expert surgeons, which is subjective, time-consuming, and often not done in real time.

MOCHA (Multimodality Objective Coach with Haptic Augmentation) aims to address these shortcomings by providing data-driven measures of RMIS skill for surgical trainees. Using a ROS-based framework I developed, an array of sensors internal and external to the robotic platform provides kinematic, kinetic, and video data streams that characterize trainee performance more directly and objectively.
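The page does not describe how the ROS framework lines up streams that arrive at very different rates. As a minimal sketch, assuming every sample carries a timestamp from a shared clock, the Python below pairs each sample of a slow reference stream (for example, video frames) with the nearest-in-time sample from each faster stream, and drops reference samples that have no match within a tolerance. The `Sample` type, the `align` and `nearest` functions, and the tolerance are hypothetical. Within ROS itself, `message_filters.ApproximateTimeSynchronizer` fills a similar role.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class Sample:
    t: float                  # timestamp in seconds on a shared clock (assumed)
    value: Tuple[float, ...]  # payload, e.g. (Fx, Fy, Fz) or a frame index


def nearest(times: Sequence[float], samples: Sequence[Sample],
            t: float, tol: float) -> Optional[Sample]:
    """Return the sample whose timestamp is closest to t, or None if the
    closest one is more than tol seconds away. `times` must be sorted."""
    i = bisect_left(times, t)
    candidates = [j for j in (i - 1, i) if 0 <= j < len(samples)]
    if not candidates:
        return None
    j = min(candidates, key=lambda k: abs(times[k] - t))
    return samples[j] if abs(times[j] - t) <= tol else None


def align(reference: Sequence[Sample], others: Sequence[Sequence[Sample]],
          tol: float = 0.01) -> List[Tuple[Sample, ...]]:
    """Build one tuple per reference sample: (reference, match_1, ..., match_n).
    Reference samples lacking a match in any other stream are dropped."""
    time_lists = [[s.t for s in stream] for stream in others]
    frames = []
    for ref in reference:
        matches = [nearest(ts, stream, ref.t, tol)
                   for ts, stream in zip(time_lists, others)]
        if all(m is not None for m in matches):
            frames.append((ref, *matches))
    return frames


# Hypothetical streams: three video frames, ~1 kHz force, ~200 Hz acceleration.
video = [Sample(0.0, (0,)), Sample(0.033, (1,)), Sample(1.0, (2,))]
force = [Sample(0.001 * k, (float(k),)) for k in range(50)]
accel = [Sample(0.005 * k, (float(k),)) for k in range(10)]
frames = align(video, [force, accel], tol=0.003)
print(len(frames))  # 2: the frame at t = 1.0 s has no force/accel sample nearby
```

Nearest-neighbor matching keeps original samples rather than interpolating, which is simple but discards most of the high-rate force and acceleration data; a real pipeline might instead summarize those streams over a window around each frame.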

A transformer-based model is being developed to analyze these data streams and output GEARS (Global Evaluative Assessment of Robotic Skills) scores, so that both expert clinicians and trainees using the robotic platform can readily interpret the results.
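The model's output layer is not specified here. One simple possibility, sketched under that assumption, is to treat the model as a regressor that emits one real number per GEARS domain and then clamp and round each value onto the rubric's integer 1 to 5 scale. The six domain names follow the published GEARS rubric; the function names and the round-half-up choice are hypothetical.

```python
import math
from typing import Dict

# The six GEARS domains, each rated on a 1-5 scale (total 6-30).
GEARS_DOMAINS = (
    "depth_perception",
    "bimanual_dexterity",
    "efficiency",
    "force_sensitivity",
    "autonomy",
    "robotic_control",
)


def to_gears(raw: Dict[str, float]) -> Dict[str, int]:
    """Map unconstrained per-domain model outputs onto integer GEARS scores."""
    scores = {}
    for domain in GEARS_DOMAINS:
        clamped = min(max(raw[domain], 1.0), 5.0)   # keep within 1..5
        # Round half up; Python's built-in round() would send 2.5 to 2.
        scores[domain] = math.floor(clamped + 0.5)
    return scores


def gears_total(scores: Dict[str, int]) -> int:
    """Sum of the domain scores."""
    return sum(scores[d] for d in GEARS_DOMAINS)


# Hypothetical raw model output for one trial.
raw_output = {
    "depth_perception": 3.4,
    "bimanual_dexterity": 4.5,
    "efficiency": 0.2,
    "force_sensitivity": 6.1,
    "autonomy": 2.5,
    "robotic_control": 3.0,
}
scores = to_gears(raw_output)
print(scores, gears_total(scores))
```

Reporting per-domain integers on the familiar rubric, rather than a single opaque number, is what makes such output directly comparable with a clinician's own GEARS rating.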

In our current study, the force and acceleration signals from MOCHA drive wrist-squeezing and vibrotactile haptic feedback on BREW devices worn by surgical trainees.
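The exact mapping from MOCHA's signals to BREW actuator commands is not given. A minimal sketch, assuming the device accepts normalized commands in [0, 1]: force magnitude drives wrist squeeze through a deadband-plus-saturation ramp, and acceleration drives vibration after a first-order high-pass filter strips out gravity and slow arm motion. All gains, cutoffs, and function names below are hypothetical placeholders, not MOCHA's tuned values.

```python
import math
from typing import List, Sequence


def squeeze_command(force_n: float, deadband_n: float = 0.5,
                    f_max_n: float = 10.0) -> float:
    """Normalized wrist-squeeze command in [0, 1] from a force magnitude in N.
    Below the deadband nothing happens; at f_max_n and above it saturates."""
    if force_n <= deadband_n:
        return 0.0
    return min((force_n - deadband_n) / (f_max_n - deadband_n), 1.0)


def high_pass(signal: Sequence[float], dt: float, cutoff_hz: float) -> List[float]:
    """First-order (RC) high-pass: y[i] = a * (y[i-1] + x[i] - x[i-1]).
    The output starts at 0, so a constant offset such as gravity yields 0."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    a = rc / (rc + dt)
    out: List[float] = []
    for i, x in enumerate(signal):
        y = 0.0 if i == 0 else a * (out[-1] + x - signal[i - 1])
        out.append(y)
    return out


def vibration_command(accel_g: Sequence[float], dt: float,
                      cutoff_hz: float = 20.0, a_max_g: float = 2.0) -> List[float]:
    """Normalized vibrotactile amplitude in [0, 1] from one accelerometer axis."""
    return [min(abs(y) / a_max_g, 1.0) for y in high_pass(accel_g, dt, cutoff_hz)]


print(squeeze_command(5.25))                          # 0.5
print(vibration_command([1.0, 1.0, 1.0], dt=0.001))   # [0.0, 0.0, 0.0]
```

The deadband keeps light incidental contact from triggering constant squeeze, and filtering before vibrating means only transient events (such as tool collisions) reach the trainee rather than the steady pull of gravity.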

The video below (4x speed) shows the endoscopic view of the surgical training task we are using in our study, as recorded by MOCHA.

Finally, here are some signals we can collect with MOCHA:

Tool-platform forces: ATI Mini40 F/T transducer housed in the task platform (under the suture pad).

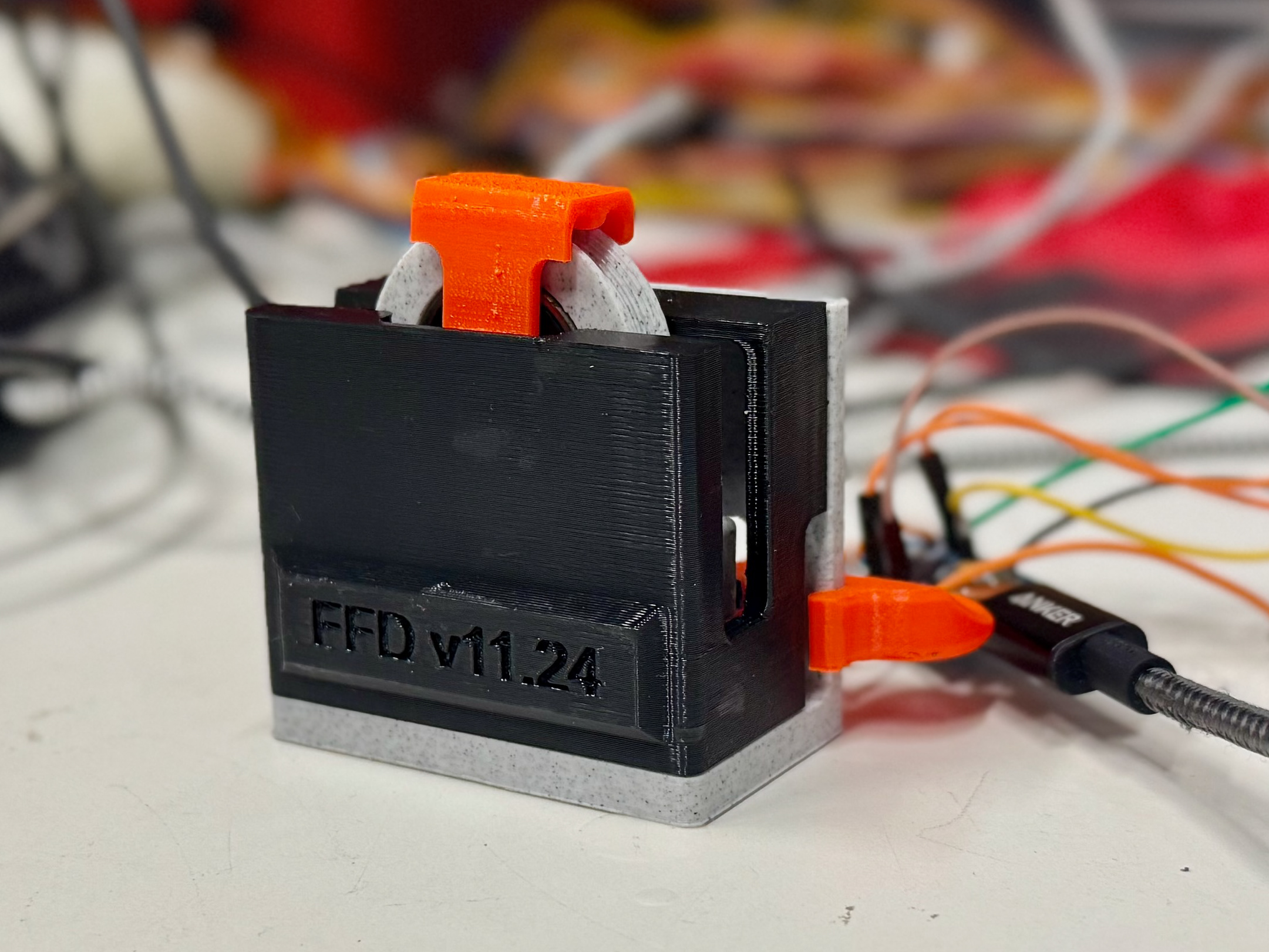

Tool-tool forces: Intuitive Surgical Large Needle Drivers equipped with ForceN force-sensing laminate.

Tool accelerations/vibrations: ADXL345 digital accelerometers, one clipped onto each da Vinci robot arm.

Stereoscopic video: from the da Vinci endoscope.

Tool Cartesian position: from the da Vinci research API installed on the clinical da Vinci Si.

MTM (Master Tool Manipulator) Cartesian position: from the research API.

Console head-in/head-out events: from the research API.

...plus many more kinematic signals and event information from the research API.
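As an example of turning one of these raw signals into physical units, the sketch below decodes a 6-byte ADXL345 burst read (registers DATAX0 through DATAZ1, 0x32 to 0x37) into accelerations in g. It assumes the sensor is configured for full-resolution mode with right-justified data, where the datasheet's typical scale factor is 3.9 mg/LSB; how MOCHA actually configures and reads its accelerometers is not stated, and `adxl345_to_g` is a hypothetical helper.

```python
import struct
from typing import Tuple

# Assumed configuration: FULL_RES = 1 and right-justified data in DATA_FORMAT.
# In full-resolution mode the datasheet's typical scale factor is 3.9 mg/LSB
# for every g range.
SCALE_G_PER_LSB = 0.0039


def adxl345_to_g(raw: bytes) -> Tuple[float, float, float]:
    """Convert a 6-byte burst read of DATAX0..DATAZ1 (0x32..0x37) to g.
    Each axis is a little-endian, two's-complement 16-bit integer."""
    if len(raw) != 6:
        raise ValueError("expected 6 bytes (DATAX0 through DATAZ1)")
    x, y, z = struct.unpack("<hhh", raw)
    return (x * SCALE_G_PER_LSB, y * SCALE_G_PER_LSB, z * SCALE_G_PER_LSB)


# A sensor lying flat reads roughly +1 g on Z (256 LSB at 3.9 mg/LSB).
sample = struct.pack("<hhh", 3, -2, 256)
print(adxl345_to_g(sample))
```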

Feel free to contact me with any questions.